About

VTplus is the market-leading research and development company for integrated VR simulation systems used to treat mental and behavioral disorders, such as fear of heights or social anxiety. For more information, please visit the official website at http://www.vtplus.eu.

I have been working for VTplus as a working student in VR development since late 2019. I have been involved in development for their general VR simulation system, the OPTAPEB project, VirtualNoPain and REHALITY. This page showcases some of the work I have done at VTplus.

The entries on this page are shown in reverse chronological order, from most recent to oldest.

My Role: VR Developer (Generalist)

May 2019 – Sept. 2019: Intern

Nov. 2019 – March 2024: Working Student

AI System for Automatic Door Detection, Opening, and Traversal by NPCs

I created a system for our NPCs that allows them to automatically detect doors in their path, recognize whether they are open, and open them if needed to pass through.

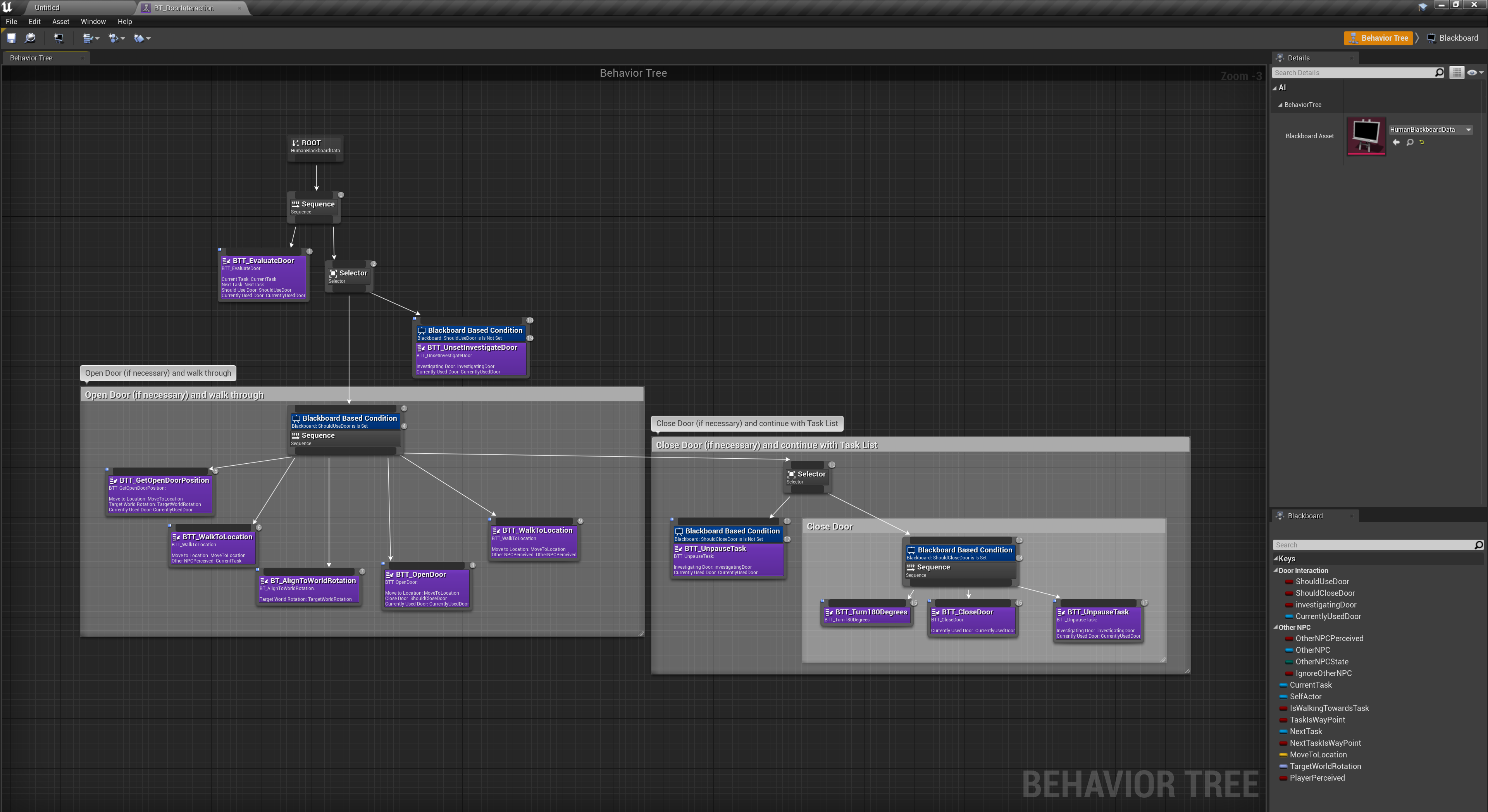

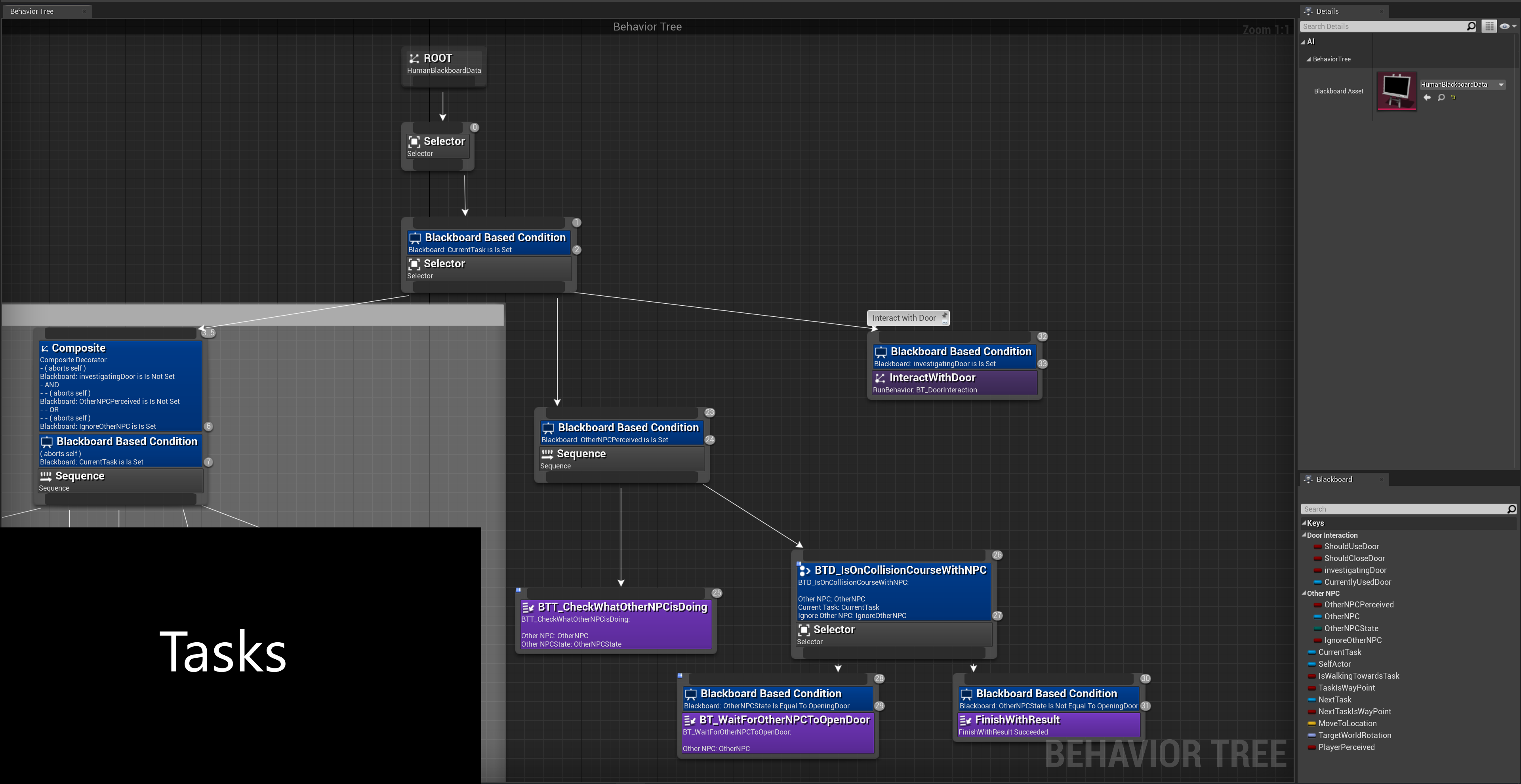

The system consists of two main parts: the doors themselves and the NPCs’ behaviour tree. It was implemented using Unreal Engine’s Blueprint visual scripting system.

Disclaimer: Please note that this system is still a work in progress and still needs visual polish. Footage shown here does not represent the final product.

How It Works

Doors:

The doors possess a trigger volume that can be detected by the NPCs. Each door also has two points, adjustable in the editor, that the NPCs move to: one for opening the door and one for closing it.

The doors also hold references to the animations that should be played when opening and closing them. This allows for correct opening animations depending on the door’s size and style.
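The door setup described above can be pictured as plain data. The following is an engine-agnostic Python sketch, not the actual Blueprint: the class name, all field names, and the spherical trigger volume are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Door:
    """Hypothetical stand-in for a door actor (names are illustrative)."""
    trigger_center: Vec3   # centre of the trigger volume (assumed spherical here)
    trigger_radius: float  # radius of the trigger volume
    open_point: Vec3       # editor-adjustable point an NPC moves to for opening
    close_point: Vec3      # editor-adjustable point an NPC moves to for closing
    open_animation: str    # reference to the animation played when opening
    close_animation: str   # reference to the animation played when closing
    is_open: bool = False

    def contains(self, position: Vec3) -> bool:
        """Return True if the position lies inside the trigger volume."""
        squared_distance = sum(
            (p - c) ** 2 for p, c in zip(position, self.trigger_center)
        )
        return squared_distance <= self.trigger_radius ** 2
```

Keeping the animation references on the door rather than on the NPC is what lets each door style bring its own matching animation.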

Behaviour Tree:

The basis of our NPC behaviour is our Task-System, which allows NPCs to move to a specific location and, once arrived, play an animation or trigger a Level Sequence. My Door-Opening System was designed to integrate seamlessly into the Task-System and was implemented as a sub-tree.

If an NPC enters the trigger volume of a door while walking towards a Task, it performs a capsule trace between its current location and the location of the Task it is walking to. If the trace hits the door, the NPC evaluates whether the door is already open. If it is open, the NPC simply walks through and continues on its path. If not, it moves to the location from which the door is opened and opens it. Once the door is open, the NPC passes through, moves to the door-closing location, and closes the door. Then it continues walking towards the Task.

Furthermore, the NPCs periodically check whether another NPC is crossing their path. Once they have detected another NPC, they evaluate what it is doing. If the other NPC is simply walking or standing there, they try to avoid bumping into it using Unreal’s built-in RVO system. If the other NPC is currently interacting with a door, they instead wait for it to finish before interacting with the door themselves.

To allow for more control, NPCs can be instructed to never use doors, and the doors themselves can be set to be unusable by NPCs. Furthermore, a simplified behaviour tree that does not include the Door-System can be used instead. This was designed for scenarios where some NPCs pass by doors on their path but should never enter through any of them, and for scenarios where no doors are present.
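The decision flow in the paragraph above can be summarized as a small planning function. This is a minimal Python sketch rather than the Blueprint sub-tree itself: the function name and step names are hypothetical, and the result of the capsule trace is passed in as a boolean because the trace itself is performed by the engine.

```python
from typing import List


def plan_door_steps(trace_hits_door: bool, door_is_open: bool) -> List[str]:
    """Return the ordered steps an NPC takes after entering a door's trigger volume."""
    if not trace_hits_door:
        # The door is not between the NPC and its Task, so nothing changes.
        return ["continue_to_task"]
    if door_is_open:
        # An open door is simply walked through.
        return ["walk_through", "continue_to_task"]
    # A closed door is opened, passed, and closed again behind the NPC.
    return [
        "move_to_open_point",
        "open_door",
        "walk_through",
        "move_to_close_point",
        "close_door",
        "continue_to_task",
    ]
```

For example, `plan_door_steps(True, False)` yields the full six-step sequence, ending with the NPC resuming its walk to the Task.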
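The reaction to another NPC boils down to a two-way choice. Here is a hedged Python sketch of that rule; the enum and function names are assumptions for illustration, and the actual avoidance is handled by Unreal’s RVO system rather than by code like this.

```python
from enum import Enum, auto


class Activity(Enum):
    """What the other NPC is currently doing (illustrative categories)."""
    WALKING = auto()
    STANDING = auto()
    USING_DOOR = auto()


class Reaction(Enum):
    """How the observing NPC responds."""
    AVOID = auto()  # steer around the other NPC (RVO in the real system)
    WAIT = auto()   # hold position until the door interaction has finished


def react_to(other_activity: Activity) -> Reaction:
    """Pick a reaction to an NPC that is crossing the path."""
    if other_activity is Activity.USING_DOOR:
        return Reaction.WAIT
    return Reaction.AVOID
```

Waiting instead of steering around a door interaction keeps two NPCs from trying to operate the same door at once.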
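These switches combine in a simple way, sketched below in Python. The function names, parameter names, and tree labels are hypothetical and only mirror the options described above; they are not taken from the actual project.

```python
def may_use_door(npc_allowed_to_use_doors: bool, door_usable_by_npcs: bool) -> bool:
    """An NPC uses a door only if neither the NPC nor the door forbids it."""
    return npc_allowed_to_use_doors and door_usable_by_npcs


def select_behaviour_tree(scenario_has_doors: bool, npc_allowed_to_use_doors: bool) -> str:
    """Choose the simplified tree whenever the door sub-tree can never be needed."""
    if scenario_has_doors and npc_allowed_to_use_doors:
        return "full_tree_with_door_system"
    return "simplified_tree_without_door_system"
```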

My Role: AI Programmer

VirtualNoPain

The VirtualNoPain research association aims to provide a safe way for patients with chronic pain to control their brain activity via neurofeedback training in order to alleviate their pain.

More Information can be found here: https://www.uni-wuerzburg.de/aktuelles/pressemitteilungen/single/news/schmerzfrei-dank-virtual-reality/

My Contributions

I created methods for visualizing the patients’ brain activity using the particle effects and other visualization techniques described below. The data is streamed into Unreal Engine via a plug-in and then fed into the Blueprints and shaders I made.

I created a leaves particle system whose direction and acceleration can be controlled with the streamed-in data, as well as a foliage shader that simulates movement in the wind depending on the same data.
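To give an idea of how a streamed value can drive such an effect, here is a minimal Python sketch. It is not the actual implementation: it assumes the feedback value is normalized to the range 0 to 1 and mapped linearly, and the function name, parameter names, and default limits are all hypothetical.

```python
import math
from typing import Tuple


def clamp01(value: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def wind_from_signal(
    signal: float,
    max_acceleration: float = 200.0,  # assumed upper limit, arbitrary units
    max_angle_deg: float = 90.0,      # assumed range of the direction sweep
) -> Tuple[Tuple[float, float], float]:
    """Map a normalized feedback value to a 2D wind direction and an acceleration."""
    s = clamp01(signal)
    angle = math.radians(s * max_angle_deg)
    direction = (math.cos(angle), math.sin(angle))  # unit vector in the ground plane
    return direction, s * max_acceleration
```

The returned direction and acceleration would then be written to the particle system and the foliage shader as parameters each time a new value arrives.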

I have also created a Niagara particle system of butterflies that are used in one of the scenarios. I created the model and textures of the butterfly and animated it via vertex animation.

Furthermore, I designed and implemented large parts of the GUI used by the therapists, built with our in-house TypeScript library for GUI creation.

My Role: Material Artist, VFX Artist, UI Designer, UI Programmer, Scenario Programmer, QA Tester

Alcohol Addiction Scenario Prototype

At VTplus, we also develop scenarios that can be used in treating addictions, such as an addiction to nicotine or, most recently, an addiction to alcohol. I was tasked with creating a first prototype of this scenario, based on one of our nicotine addiction scenarios.

My Contributions

My work mostly consisted of writing new short dialogs that can be used to trigger a desire for alcohol, to help patients train to withstand this desire. I then created multiple short sequences that play these dialog snippets together with appropriate animations. These sequences can be played individually or strung together into a longer sequence, serving as a proof of concept for this new scenario.

My Role: Dialog Writer, Animator, Programmer

REHALITY

The REHALITY project aims to aid in the rehabilitation of stroke patients. It is being developed in cooperation with the neurological university clinic Tübingen and the Hochschule der Medien.

Disclaimer: Footage was taken while the REHALITY project was still ongoing, thus everything showcased here is work in progress footage and does not represent the final product.

My Contributions

I have worked on multiple subsystems, including a prototypical implementation of mirror therapy and customization options for the user’s avatar, such as changing its skin color. My most prominent contribution is a puzzle mini-game that uses eye tracking as its primary control method but also supports other input methods, such as a stream of EEG data. There are multiple motifs to choose from, and it is also possible to create puzzles with more pieces. The hand that should be used to pick up a piece can be chosen via different criteria. The game also includes different user feedback modalities, consisting of visual feedback via a particle system and a count-up when pieces have been placed correctly, as well as auditory and sensory feedback. I have also created a short tutorial explaining how the game is played, what the different interaction symbols mean, and how to use them.

My Role: Gameplay Designer, Gameplay Programmer, Animator, Rigger, UI Designer, UI Programmer, QA Tester

General work for VTplus’ systems

VTplus offers a wide variety of virtual reality therapy systems, ranging from training simulations for the fear of heights, to training systems for social anxieties and exposure therapy for the fear of spiders.

My Contributions

My work at VTplus ranges from helping create and extend subsystems in Unreal Engine 4, such as the AI and animation system of the non-player characters and an in-engine control rig built with Unreal’s Control Rig plugin, to rigging and animating characters in Blender, GUI scripting with our in-house TypeScript library, and testing, documenting, and bug-fixing the system.

My Role: Generalist; Programmer (general processes and gameplay, AI, UI), Animator, Rigger, QA Tester, Prop Artist, Material Artist

All content on this page is © VTplus GmbH